Compressive sensing - the most magical of signal processing.

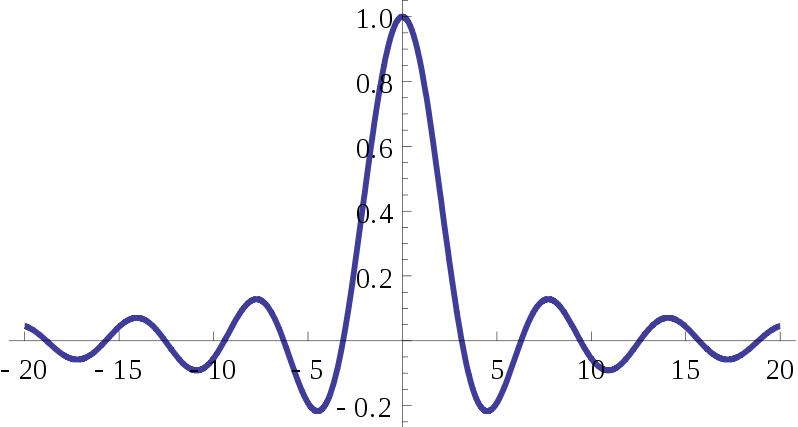

We learn about the Nyquist rate and the sampling theorem in our undergraduate studies. At least some of us might have felt that it is a bit of an overkill. Why do we always sample at twice the highest frequency present in the signal? Can't we make use of some innate pattern in the signal and reduce the sampling frequency? In Nyquist sampling, we reconstruct the signal using sinc functions. What if we used some other set of functions for reconstruction?

Compressive sensing techniques formalize this idea and considerably reduce the number of samples required. The key idea is that we already know a basis in which the signal is very sparse! From a practical point of view, this means we know a matrix that, multiplied by a sparse vector, gives the signal with very high accuracy. We can then solve an optimization problem that enforces both faithful reconstruction of the measurements and sparsity of the coefficients, and so get the signal back.

In a nutshell, the idea can be explained in the following three steps (subject to conditions which I don't explain here):

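1. We take measurements $$\textbf{s} = \textbf{M}\textbf{x}$$ of the signal $$\textbf{x}$$, where the measurement matrix $$\textbf{M}$$ has far fewer rows than columns, i.e. far fewer samples than signal values.
2. We know a basis $$\textbf{B}$$ in which the signal has a sparse coefficient vector $$\textbf{c}$$: $$\textbf{x} = \textbf{B}\textbf{c}$$.
3. Find $$\textbf{c}$$ such that $$\textbf{s} = \textbf{MBc}$$ and $$\textbf{c}$$ is sparse. The signal is then recovered as $$\textbf{x} = \textbf{B}\textbf{c}$$.

A tutorial is given in my paper.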
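One common way to write the last step as the optimization problem mentioned above is the LASSO. Here the $$\ell_1$$ norm stands in for sparsity, and $$\lambda$$ trades off fitting the measurements against keeping $$\textbf{c}$$ sparse:

$$ \hat{\textbf{c}} = \arg\min_{\textbf{c}} \; \tfrac{1}{2}\|\textbf{s} - \textbf{MBc}\|_2^2 + \lambda\|\textbf{c}\|_1 $$

The Python sketch below solves this problem with iterative soft-thresholding (ISTA). It is a toy setup, not a reference implementation. The assumptions are: the sparsity basis $$\textbf{B}$$ is an orthonormal DCT, $$\textbf{M}$$ is a random Gaussian matrix, and the helper names `dct_basis`, `soft_threshold` and `ista`, along with the values of `n`, `m`, `k` and `lam`, are hypothetical choices for illustration.

```python
import numpy as np


def dct_basis(n):
    """Orthonormal DCT-II basis B (hypothetical choice); column k is the k-th cosine atom."""
    j = np.arange(n)
    k = j[:, None]
    D = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * j + 1) * k / (2 * n))
    D[0, :] = np.sqrt(1.0 / n)
    return D.T  # x = B @ c


def soft_threshold(v, t):
    """Shrink every entry of v towards zero by t (proximal operator of t*||.||_1)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def ista(A, s, lam, n_iter=5000):
    """ISTA for min_c 0.5*||s - A c||^2 + lam*||c||_1."""
    L = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the gradient of the fit term
    c = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ c - s)                  # gradient step on the fit term
        c = soft_threshold(c - grad / L, lam / L)  # then shrink towards sparsity
    return c


rng = np.random.default_rng(0)
n, m, k = 128, 48, 5  # signal length, number of measurements, nonzero coefficients
B = dct_basis(n)
c_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
c_true[support] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)
x = B @ c_true                                 # signal that is sparse in B
M = rng.standard_normal((m, n)) / np.sqrt(m)   # m random measurements, m < n
s = M @ x
c_hat = ista(M @ B, s, lam=0.01)
x_hat = B @ c_hat
rel_err = np.linalg.norm(x_hat - x) / np.linalg.norm(x)
print(f"{m} measurements of a length-{n} signal, relative error {rel_err:.2e}")
```

Raising `lam` gives sparser but more strongly shrunk coefficients. Lowering it reduces that bias, at the cost of slower convergence.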

Further reading: Orthogonal matching pursuit.
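Orthogonal matching pursuit tackles the last step greedily rather than through an $$\ell_1$$ penalty. At each iteration it adds the column of $$\textbf{A} = \textbf{MB}$$ that is most correlated with the unexplained part of $$\textbf{s}$$, then re-fits all selected coefficients by least squares.

Below is a minimal NumPy sketch. It assumes the sparsity level `k` is known in advance. The random orthonormal basis, the Gaussian measurement matrix, the sizes and the helper name `omp` are illustrative assumptions.

```python
import numpy as np


def omp(A, s, k):
    """Orthogonal matching pursuit: pick k columns of A that explain s."""
    residual = s.copy()
    support = []
    for _ in range(k):
        # Column most correlated with the part of s not yet explained.
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j not in support:
            support.append(j)
        # "Orthogonal" step: re-fit all chosen coefficients jointly by least squares,
        # which leaves the residual orthogonal to every selected column.
        coef, *_ = np.linalg.lstsq(A[:, support], s, rcond=None)
        residual = s - A[:, support] @ coef
    c = np.zeros(A.shape[1])
    c[support] = coef
    return c


rng = np.random.default_rng(1)
n, m, k = 128, 64, 4
# Hypothetical sparsity basis: a random orthonormal matrix from a QR factorisation.
B, _ = np.linalg.qr(rng.standard_normal((n, n)))
c_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
c_true[support] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)
x = B @ c_true
# m random measurements of the length-n signal (m < n).
M = rng.standard_normal((m, n)) / np.sqrt(m)
s = M @ x
c_hat = omp(M @ B, s, k)
print("exact recovery:", np.allclose(B @ c_hat, x))
```

OMP needs the sparsity level (or a residual tolerance) as its stopping rule. In exchange, each iteration is cheap, and the final least-squares fit does not shrink the recovered coefficients the way an $$\ell_1$$ penalty does.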
